Enter Claude Code

Guardrail Garage #002 - A hands-on introduction to Claude Code as an AI pair programmer, with setup tips, workflow patterns, and hard-won lessons from a two-week refactor sprint.

Disclaimer: This post is heavily inspired by the 10XDevs course I’m currently participating in. I considered myself an advanced LLM user, but there are levels to this game. I’ll only scratch the surface and introduce a few concepts here. I highly recommend you dig in further. If you understand Polish - or do not mind a quick copy-and-paste to translate - give these folks a follow:

Przeprogramowani (“reprogrammed”) project

Przemek Smyrdek - LinkedIn

Marcin Czarkowski - LinkedIn

Thanks for waiting two weeks for this one. My workload was at an all-time high, and I could not muster enough willpower to ship the Claude Code post on time.

No worries. This one goes a step further - meet a powerful tool, Claude Code by Anthropic.

I’ve spent 100+ hours in Claude Code over the last two weeks, racing a delivery deadline, so this post is fresh from the trenches.

An agentic approach to software development (and beyond)

Claude Code is essentially an autonomous AI pair programmer. It is not just a chat interface that answers questions - though it can do that, and it is solid at non-programming tasks too. The real magic happens when you drop it into your repository and ask it to, for example:

understand the codebase

document the codebase

develop new features (or build the project from scratch)

evaluate the codebase (is there room for improvement?)

refactor the codebase (actually implement improvements or switch to a better architecture)

you name it

Main advantages of Claude Code:

powered by state-of-the-art large language models

works with familiar building blocks: grep-style search, reading and writing files, and running shell commands

follows instructions well and lets you manage context with commands like /clear and /compact (it also compacts context automatically when the window is nearly full)

has access to local files in your working directory

for advanced users: you can set up remote command execution - for example, ask Claude to change your codebase from your phone

Obviously, using Claude Code means sending information about your codebase and workflow through Anthropic’s data centres. Unless you’re on a top-secret project, you are probably fine. Usually the data is the secret sauce, not the software - though not always. The older I get, the less room I have for “always” and “never”.

I use iTerm2 on macOS (though Warp is on my to-do list - the go-to terminal in my technobubble lately).

To install Claude Code globally with npm (assuming you have Node.js and npm installed already[1]):

```shell
# Install Claude Code globally
npm install -g @anthropic-ai/claude-code
```

Now you can cd into your project folder and launch CC with:

```shell
# Launch CC
claude
```

Here are the commands and controls I use most:

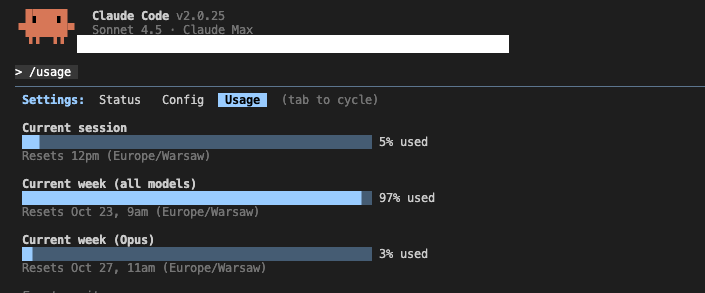

/context - show current context usage as a percentage. If it approaches 100%, consider saving your progress and resetting.

/clear - clear the current context window and start fresh.

/usage - monitor your usage, especially useful for Max plan subscribers and anyone without an unlimited budget.

Shift+Tab - cycle between plan mode, accept-edits mode, and regular mode, where CC asks you to accept each proposed change.

/ide - integrate with your IDE (like Cursor or VS Code) to view diffs proposed by CC. Make sure CC’s working directory matches the folder open in your IDE.

@ - specify files to load into context, such as rules, logs, or relevant parts of the codebase.

ultrathink - include this keyword in your prompt to give Claude the maximum token budget for internal reasoning. Good for planning, requirements creation, and solving more complex issues.
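For the /ide tip, one low-tech way to keep the working directories aligned, assuming your IDE has the repository root open, is to always start Claude Code from the git root. The helper name cc_root is made up for this sketch; git rev-parse --show-toplevel is a standard git command.

```shell
# Hypothetical helper: launch Claude Code from the root of the current git repo,
# so its working directory matches an IDE workspace opened at that root.
# The body runs in a subshell ( ... ), so your own shell's cwd is left untouched.
cc_root() (
  root=$(git rev-parse --show-toplevel) || exit 1   # fails outside a git repo
  cd "$root" || exit 1
  claude "$@"                                       # pass any CLI arguments through
)
```

Add it to your shell profile and run cc_root from any subfolder; arguments are forwarded to claude unchanged.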

When it comes to setup, you should have an “.ai” folder with two files:

Claude.md - a description of your project: overview, tech stack, code style and conventions, development workflow, and testing strategy. Keep it informative but concise. If your Claude.md consumes around 20% of available context, something is off.

Rules.md - documentation rules, governance for AI interaction (for example, always ask me to run tests before applying any new change), code standards, safety practices, etc.
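If you want to start from a skeleton, here is a minimal sketch that creates both files with the sections listed above. The function name and heading wording are my own illustrative choices, not an official template. Worth knowing: Claude Code automatically loads a CLAUDE.md from the project root, while files under .ai/ are not guaranteed to be picked up on their own, so referencing them with @ is a sensible habit.

```shell
# Hypothetical helper: scaffold_ai_folder [DIR] creates DIR/Claude.md and DIR/Rules.md
# (DIR defaults to .ai). Existing files are never overwritten.
scaffold_ai_folder() {
  dir=${1:-.ai}
  mkdir -p "$dir" || return 1

  if [ ! -e "$dir/Claude.md" ]; then
    cat > "$dir/Claude.md" <<'EOF'
# Project overview

# Tech stack

# Code style and conventions

# Development workflow

# Testing strategy
EOF
  fi

  if [ ! -e "$dir/Rules.md" ]; then
    cat > "$dir/Rules.md" <<'EOF'
# Documentation rules

# AI interaction
- Always ask me to run tests before applying any new change.

# Code standards

# Safety practices
EOF
  fi
}
```

Run it once from the project root, then fill in each section, keeping Claude.md short.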

Read more about this on Anthropic’s website here.
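To sanity-check the 20% guideline for Claude.md before you even open a session, a rough estimate is enough. This sketch assumes about 4 characters per token (a common heuristic, not Claude’s actual tokenizer) and a 200,000-token context window; both are assumptions, so treat the result as a ballpark and rely on /context for the real number. The function name is hypothetical.

```shell
# Hypothetical helper: context_share FILE [WINDOW_TOKENS] prints an integer percentage.
# Assumptions: ~4 characters per token, default window of 200,000 tokens.
context_share() {
  file=$1
  window=${2:-200000}
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  chars=$(wc -c < "$file" | tr -d '[:space:]')   # byte count; BSD wc pads with spaces
  tokens=$((chars / 4))
  pct=$((tokens * 100 / window))
  echo "$pct"
  if [ "$pct" -ge 20 ]; then
    echo "warning: $file is roughly $tokens tokens, about $pct% of the context window" >&2
  fi
}
```

Under these assumptions, an 80,000-character Claude.md comes out at roughly 20,000 tokens, so context_share .ai/Claude.md prints 10.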

There are more advanced topics like hooks, skills, and subagents. I have not used them much yet, but I’ll share notes when I do.

However, here’s what I have learned so far…

Lessons learned - some the hard way

Over the last two weeks I spent 100+ hours doing a refactoring Blitzkrieg on a legacy project. Much of the code did not follow the KISS, SOLID, or YAGNI principles[2]. I will not claim the current version is a perfect example of all of them, but I achieved a lot and I am proud of the result. Keep in mind - my background is more data scientist than software developer ;).

Important things before you start:

Establish a PRD (Product Requirements Document) describing what you want to achieve. You can do this with Claude in plan mode (use Shift+Tab) and ultrathink.

Save the PRD in docs/PRD.md. If the PRD is long, split it into phases. Then create an implementation plan and a to-do list for each phase in separate .md files.

Ask CC to follow @.ai/Claude.md and @.ai/Rules.md while planning - this increases the chance it follows your standards and matches your project context.

After planning, execute. My recommendation: never switch on auto-accept edits, and always review changes carefully. Diffs are easier to read in your IDE than in the terminal.
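A minimal sketch of the docs layout described above: docs/PRD.md plus one folder per phase with its own implementation plan and to-do list. The function name, the phase-N folders, and the file names are hypothetical; use whatever naming your team prefers.

```shell
# Hypothetical helper: scaffold_prd_docs [PHASES] creates docs/PRD.md and, for each
# phase, docs/phase-N/implementation-plan.md and docs/phase-N/todo.md.
# Existing files are left alone.
scaffold_prd_docs() {
  phases=${1:-1}
  mkdir -p docs || return 1
  [ -e docs/PRD.md ] || printf '# Product Requirements Document\n' > docs/PRD.md

  i=1
  while [ "$i" -le "$phases" ]; do
    d="docs/phase-$i"
    mkdir -p "$d" || return 1
    [ -e "$d/implementation-plan.md" ] ||
      printf '# Phase %s: implementation plan\n' "$i" > "$d/implementation-plan.md"
    [ -e "$d/todo.md" ] ||
      printf '# Phase %s: to-do\n\n- [ ] \n' "$i" > "$d/todo.md"
    i=$((i + 1))
  done
}
```

After scaffold_prd_docs 3, you can point CC at a single phase in plan mode, for example with @docs/PRD.md and @docs/phase-1/todo.md, instead of loading every plan at once.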

I will not delve into the app details. Think of it as a predictive model factory framework that helps users develop models faster - something along the lines of auto-sklearn.

On day one I wanted to rebuild everything from scratch. That would have been a huge mistake. After 12 hours of vibe-coding with CC without sweating the implementation details, the results of the very first functionality (let’s say “data preparation”) already differed significantly from the original. Fortunately, I talked with my mentor the next morning. He sobered me up:

Listen, this is a transformation project with an uncertain outcome - you need to iterate in very small increments. After each small change, make sure the existing functionality is maintained and produces exactly the same results.

That was a shock to the system - but I needed it. I ditched my day-one ambitions and started again. Slowly, new functionalities were added, old ones refactored, and observability and user-friendliness went up.

First lesson learned? Skip YOLO mode. Plan, execute meticulously, and work in small, testable increments.
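My mentor’s rule (every small change must reproduce exactly the same results) is easy to automate with a golden-master check. The sketch below assumes a deterministic pipeline (fixed random seeds, no timestamps in the output files) that writes everything into a single folder; the function and folder names are hypothetical. It stores a checksum manifest of the original outputs once, then compares against it after each increment. Keep the manifest file outside the results folder, otherwise it ends up in its own checksum.

```shell
# Hypothetical golden-master helpers built on POSIX cksum (CRC + byte count):
# good for catching accidental changes, not a cryptographic guarantee.

# manifest DIR: one "CRC SIZE ./path" line per file, in a stable order.
manifest() {
  (cd "$1" && find . -type f | LC_ALL=C sort | while IFS= read -r f; do cksum "$f"; done)
}

# save_baseline DIR MANIFEST: run once, on the ORIGINAL code's outputs.
save_baseline() {
  manifest "$1" > "$2"
}

# check_against_baseline DIR MANIFEST: run after EVERY small change.
check_against_baseline() {
  if manifest "$1" | diff "$2" -; then
    echo "OK: outputs identical to baseline"
  else
    echo "FAIL: outputs differ from baseline (see diff above)" >&2
    return 1
  fi
}
```

A typical loop: save_baseline results baseline.cksum once on the original code, then re-run the pipeline and call check_against_baseline results baseline.cksum after every increment.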

What was the second lesson?

Really pay attention to tests and to whether the new code is actually executed. I thought I had well-defined rules and a solid Claude.md, loosely following 10xRules.ai from the 10xdevs.pl repo (a perk of being in Przemek and Marcin’s course; contact them and you might find some wiggle room for access).

CC, on different occasions, did things like:

produce code increments as modules that were not executed by the main app, then suggest that because they were not called we could assume they were correct

compare refactored vs original like this:

generate results with the original code

copy results from the original results folder to the refactored results folder

run a comparison of the original results vs the copied results (i.e., the same files, without executing the refactored code even once)

suggest this proves the refactor works

Funny? Sure. But if I had not watched CC’s work closely, I would have missed it - and that would have blown up later when the refactor got integrated. I have not eliminated this completely yet, but by working in smaller increments and reviewing tests carefully, I kept it in check.
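The copy-the-results trick works because the comparison trusts whatever is already sitting in the output folder. Here is a sketch of a stricter harness, with hypothetical command strings and folder names: the script itself wipes both output folders, runs both versions, refuses to compare if the refactored run wrote nothing, and only then diffs the results. It protects against stale or pre-copied outputs, not against a "refactored" command that copies files itself, so keep the commands under your control rather than the agent’s. Be careful: it deletes both output folders before running.

```shell
# Hypothetical harness: compare_refactor ORIG_CMD NEW_CMD ORIG_OUT NEW_OUT
# WARNING: deletes ORIG_OUT and NEW_OUT before running anything.
compare_refactor() {
  orig_cmd=$1 new_cmd=$2 orig_out=$3 new_out=$4

  # Refuse empty or identical output folders.
  if [ -z "$orig_out" ] || [ -z "$new_out" ] || [ "$orig_out" = "$new_out" ]; then
    echo "usage: compare_refactor ORIG_CMD NEW_CMD ORIG_OUT NEW_OUT (distinct folders)" >&2
    return 2
  fi

  # Start from empty folders so nothing pre-copied can be compared.
  rm -rf "$orig_out" "$new_out" && mkdir -p "$orig_out" "$new_out" || return 1

  # Run BOTH versions from this script.
  sh -c "$orig_cmd" || { echo "FAIL: original run failed" >&2; return 1; }
  sh -c "$new_cmd" || { echo "FAIL: refactored run failed" >&2; return 1; }

  # An empty folder means the refactored code never produced anything.
  if ! find "$new_out" -type f | grep -q .; then
    echo "FAIL: refactored run wrote no files to $new_out" >&2
    return 1
  fi

  # Byte-for-byte comparison of every file; diff exits non-zero on any difference.
  diff -r "$orig_out" "$new_out" && echo "OK: refactored outputs match the original"
}
```

For example: compare_refactor 'python original/main.py --out orig_results' 'python refactored/main.py --out new_results' orig_results new_results, where the --out flag stands in for however your own entry points choose their output folder.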

Even with those hiccups, I shipped a lot of functionality in a short time. Super satisfying. I am excited to adopt more 10X rules to boost both quality and speed.

Watch this space - I’ll share more in the coming weeks.

And that’s it for today!

To sum up, if you want to get better at pair programming (or programming, full stop):

Separate planning from execution.

Focus on good planning.

Work in small, testable iterations.

Do not let go of control - set ground rules and check they are followed.

AI can be a powerful ally, but make it follow your lead. Be the leader.

Behind closed doors

Besides this refactor sprint, I’m also actively participating in the AI Managers 2 cohort. There are business concepts I plan to integrate into my workflow, perhaps with AI assistants helping me plan - especially around calculating the tangible ROI of AI projects and translating their advantages into dollars.

I had to put other courses on hold for a moment to ship as much as I could and still sleep. But it is done now.

I also ran two rounds of 1-day LLM-enablement training at a scientific institution. I showed how to use LLMs to find and apply new information in practice while keeping a human in the loop and verifying carefully (same vibe as my CC lessons).

It went really well, though my throat is not ready for six hours of speaking with only a short break. I will get there.

More new things coming soon. Stay tuned.

As usual, do not hesitate to reach out if you want to discuss AI ideas or some other form of cooperation:

What’s coming up next week?

I’ll be catching up on the Practical LLM course, so expect some lessons learned on managing RAG and Agents next week.

See you soon!

Footnotes

Wow, that’s a lot of Wikipedia links. I hope that’s not a millennial thing. And if it is, what should I link to - Perplexity search results?